The biggest mistake is to treat AI-native ads as just another placement type. In GPT-style environments, visibility can sit inside the answer itself. That changes how people perceive influence. It also changes how marketing performance shows up in data. If you apply a search or social playbook without adapting it, you risk spending money while weakening trust.

The strategic dilemma is simple. You want reach inside AI answers, but you cannot afford to look biased or intrusive. The right approach starts with understanding how these environments make decisions, and with separating what you can measure from what you must infer.

What It Is

GPT advertising describes paid visibility that can appear within AI-generated responses. Instead of “showing an ad,” the system can surface a sponsored option as part of an explanation, shortlist, or next-step recommendation. The key shift is that your brand may be embedded in the reasoning flow.

This matters because users do not enter these environments to browse. They enter to reduce uncertainty and decide faster. A paid placement that feels like an interruption will underperform. A paid placement that improves clarity may perform well, but it must stay credible.

- Placement can look like a suggested tool, provider, or option in a comparison.

- Relevance may count for more, relative to bid size, than it does in classic auctions.

- Disclosure and tone shape whether users accept the mention.

How It Could Work Inside Answers

AI-native placements will likely combine eligibility with contextual fit. A brand may pay to be considered for certain intents, but the system still decides when a mention helps the answer. Payment can increase access, but it should not guarantee inclusion. If it did, answer quality would degrade fast.

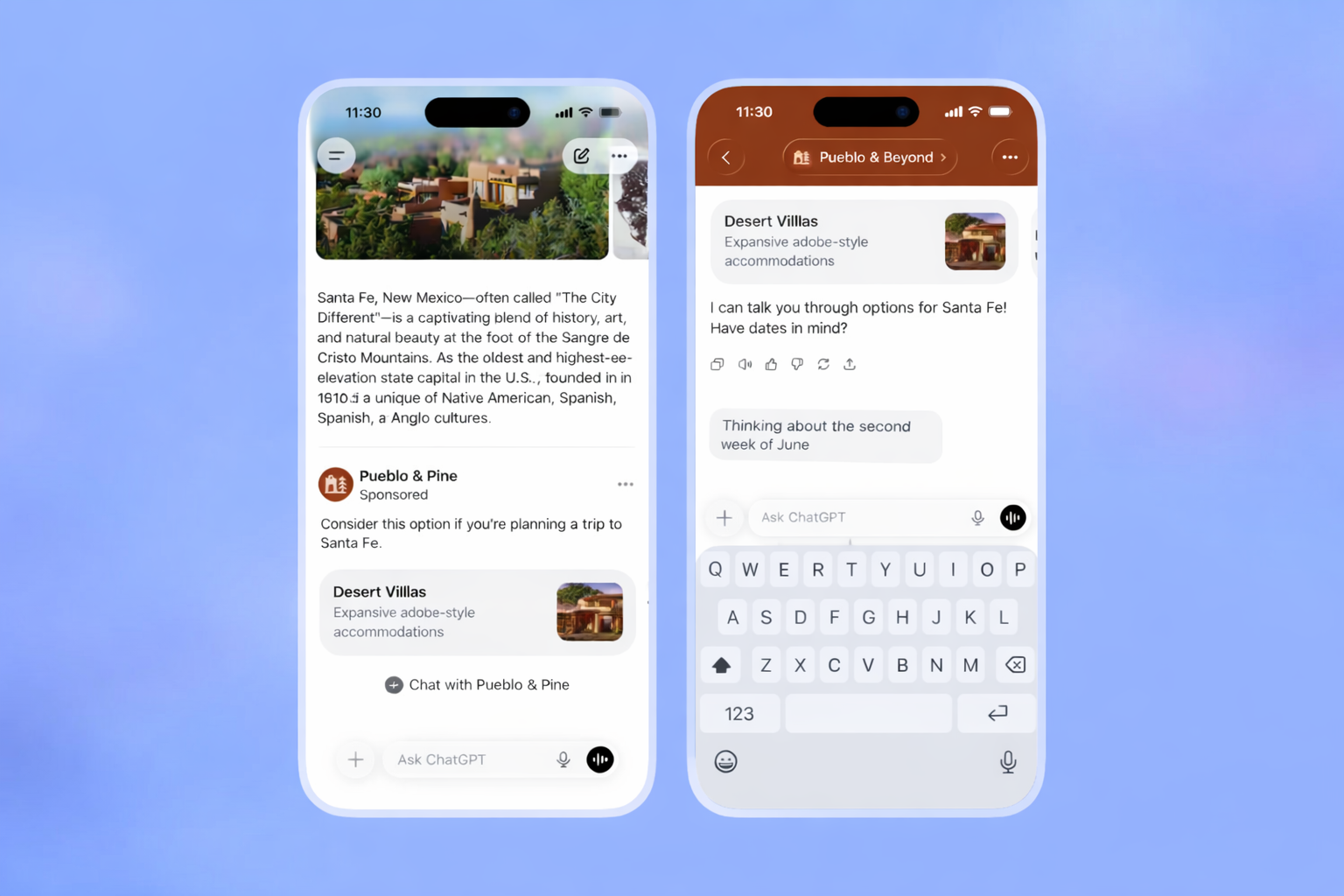

Sponsored elements can take multiple forms. The most likely forms are subtle and structured. Think labeled suggestions, “sponsored option” callouts, or sponsored citations that sit next to non-paid sources. The strongest formats will be consistent and predictable, because users need to understand what they are seeing.

A practical way to think about it is to map placements to intent depth. Informational prompts may allow light sponsorship. Decision prompts may allow fewer, more constrained mentions. That design reduces manipulation risk, but it also limits volume.

- Eligibility: topic, region, safety rules, and category policy.

- Selection: usefulness in context and match to constraints in the prompt.

- Display: explicit labeling to protect trust.
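To make the eligibility, selection, and display stages concrete, here is a minimal filter-then-rank sketch. It is purely illustrative and makes no claim about how any real platform works: the `Candidate` and `Prompt` fields, the constraint-match usefulness score, the 0.6 threshold, and the per-intent caps on sponsored mentions are all hypothetical. It shows the principle described above: a bid only breaks ties among candidates that are already eligible and already useful, so payment buys consideration, not inclusion.

```python
from dataclasses import dataclass, field

# Hypothetical caps: informational prompts allow light sponsorship,
# decision prompts allow fewer, more constrained mentions.
MAX_SPONSORED = {"informational": 2, "decision": 1}
USEFULNESS_THRESHOLD = 0.6  # assumed minimum fit before a bid matters at all


@dataclass
class Candidate:
    brand: str
    topics: set[str]
    regions: set[str]
    bid: float
    satisfies: set[str] = field(default_factory=set)  # constraints the offer meets


@dataclass
class Prompt:
    topic: str
    region: str
    intent: str  # "informational" or "decision"
    constraints: set[str]


def eligible(c: Candidate, p: Prompt, blocked_topics) -> bool:
    """Eligibility: topic, region, and category/safety policy."""
    return p.topic in c.topics and p.region in c.regions and p.topic not in blocked_topics


def usefulness(c: Candidate, p: Prompt) -> float:
    """Selection score: share of the prompt's constraints this offer meets."""
    if not p.constraints:
        return 0.0
    return len(c.satisfies & p.constraints) / len(p.constraints)


def select_sponsored(cands, p: Prompt, blocked_topics=frozenset()) -> list[str]:
    scored = [(usefulness(c, p), c) for c in cands if eligible(c, p, blocked_topics)]
    # Only candidates that clear the usefulness bar are considered at all.
    passing = [(s, c) for s, c in scored if s >= USEFULNESS_THRESHOLD]
    # Rank by usefulness first; the bid only breaks ties.
    passing.sort(key=lambda sc: (sc[0], sc[1].bid), reverse=True)
    limit = MAX_SPONSORED.get(p.intent, 0)
    # Display: every selected mention carries an explicit label.
    return [f"Sponsored: {c.brand}" for _, c in passing[:limit]]


# Hypothetical brands: the higher bidder fits only half of the user's constraints.
prompt = Prompt(topic="reporting", region="EU", intent="decision",
                constraints={"simple setup", "scheduled reports"})
cands = [
    Candidate("BigBid", {"reporting"}, {"EU"}, bid=9.0, satisfies={"scheduled reports"}),
    Candidate("GoodFit", {"reporting"}, {"EU"}, bid=2.0,
              satisfies={"simple setup", "scheduled reports"}),
]
print(select_sponsored(cands, prompt))  # ['Sponsored: GoodFit']
```

The design choice worth noticing is the order of operations: the usefulness threshold is applied before bids are looked at, and the intent-based cap limits volume even when many candidates qualify.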

Difference vs Search and Social

Search ads and social ads train users to spot advertising. AI answers do not. That creates a different expectation. The trade-off is control versus credibility. In AI outputs, credibility is the product. Any ad system that harms it will face strong limits.

The unit of value also changes. Search optimizes for click paths. Social optimizes for attention and persuasion. AI environments optimize for answer usefulness. That pushes marketers to compete on clarity, not just creatives or bids.

The table below compares strategic mechanics so you can see where old playbooks break.

| Dimension | AI Answer Environments | Search Ads | Social Ads |
|---|---|---|---|
| User mindset | Resolve a question and decide | Explore options and click | Discover content while scrolling |
| Visibility | Embedded in the response | Separated ad slots | Inserted into a feed |
| Optimization target | Contextual usefulness | Bid plus relevance | Attention plus targeting |
| Brand risk | High if disclosure is weak | Moderate and familiar | Moderate and creative-led |

What Changes in Marketing Strategy

AI answers compress the journey between question and action. Users can go from “What should I do?” to “Here is the plan” in one step. The strategic implication is that influence moves earlier in the decision process. If your brand becomes part of the plan, you gain leverage before a click happens.

This shifts how you design messaging. Instead of writing copy that wins attention, you need copy that survives summarization. Your positioning must remain accurate when shortened. If it becomes vague or misleading in a summary, the system will avoid it or users will distrust it.

A decision point follows from this. Do you invest in broad awareness, or in narrow problem ownership? Narrow ownership often wins first in AI settings. It is easier for the system to match a specific solution to a specific constraint.

- Design messaging for “explainable value,” not slogans.

- Own a small set of use cases with clear boundaries.

- Expect fewer clicks, but higher intent when you are mentioned.

Impact on SEO, SEA, and Content

Organic search traffic faces pressure when AI answers satisfy intent without a visit. That does not make SEO irrelevant. It changes what “success” looks like. Visibility can shift from rankings to being referenced as a source. Pages that explain, compare, and define clearly become easier to include in answers.

Paid search faces overlap risk. Some query types may become "answer-first," leaving less room for classic ad blocks. That pushes paid budgets toward higher-commercial prompts and toward placements where the user still wants options, not closure. Your mix may shift by category and by decision complexity.

Content strategy becomes a core lever because it shapes how the system understands your brand. If your content is thin, a paid mention may not stick. If your content is strong, the system can justify the mention with real context.

- SEO focus: structure, clarity, and coverage of decision questions.

- SEA focus: concentrate spend where intent remains transactional.

- Content focus: publish assets that remain accurate when condensed.

Measurement and Attribution Challenges

AI-native placements create new blind spots. A user may see your brand, then convert later through another channel. You may never see the exposure. Attribution becomes a model, not a report. You will need proxy signals and controlled tests more than dashboard metrics.

Measurement challenges also show up in prompt variability. Two people can ask the same question in different words. The system can respond differently. That makes impression-level reporting harder. It also makes optimization slower if the platform does not expose stable placement data.

To stay sane, separate three measurement layers. Exposure is the least reliable. Engagement may be indirect. Business outcomes remain the anchor. If the platform cannot provide strong reporting, shift effort toward experiments and lift studies.

- Use incremental tests with holdouts where possible.

- Track brand search, direct traffic, and assisted conversions as signals.

- Define “acceptable uncertainty” before spending scales.
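A holdout test reduces to comparing conversion rates between an exposed group and a holdout group. The sketch below computes absolute and relative lift with a standard normal-approximation confidence interval for a difference in proportions, then applies one possible "acceptable uncertainty" rule agreed in advance. The `Arm` structure, the `ready_to_scale` rule, the 0.01 width limit, and the numbers are hypothetical illustrations, not results or a prescribed method; a full lift study would also need proper randomization and sample-size planning.

```python
import math
from dataclasses import dataclass


@dataclass
class Arm:
    users: int
    conversions: int

    @property
    def rate(self) -> float:
        return self.conversions / self.users


def incremental_lift(exposed: Arm, holdout: Arm, z: float = 1.96) -> dict:
    """Difference in conversion rate with a normal-approximation confidence interval."""
    diff = exposed.rate - holdout.rate
    se = math.sqrt(
        exposed.rate * (1 - exposed.rate) / exposed.users
        + holdout.rate * (1 - holdout.rate) / holdout.users
    )
    return {
        "absolute_lift": diff,
        "relative_lift": diff / holdout.rate if holdout.rate > 0 else float("nan"),
        "ci_low": diff - z * se,
        "ci_high": diff + z * se,
    }


def ready_to_scale(result: dict, max_ci_width: float) -> bool:
    """Hypothetical 'acceptable uncertainty' rule, set before spend scales:
    the interval must exclude zero and be no wider than max_ci_width."""
    width = result["ci_high"] - result["ci_low"]
    return result["ci_low"] > 0 and width <= max_ci_width


# Made-up numbers for illustration only: 2.6% vs 2.0% conversion.
result = incremental_lift(Arm(10_000, 260), Arm(10_000, 200))
print(result)
print(ready_to_scale(result, max_ci_width=0.01))  # True: CI is roughly 0.0018 to 0.0102
```

Fixing `max_ci_width` before the test runs is what turns "acceptable uncertainty" from a feeling into a decision rule.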

Trust, Transparency, and Governance

Trust is the constraint that shapes everything. If users feel answers are bought, usage can drop. That hurts both the platform and its advertisers. Transparency is a product requirement, not a marketing preference. Clear labels reduce confusion, but they do not remove perception risk.

Bias risk is also real. Paid inclusion can amplify established brands and shrink diversity of options. That can harm users and reduce market fairness. Platforms may respond with rules that limit category coverage, require disclosures, and restrict sensitive segments. Expect guardrails, not freedom.

Marketers need governance on their side too. Decide what claims are allowed, how disclosures will be handled, and which categories are off-limits. Also decide when to stop if the environment starts to feel manipulative.

- Set internal rules for claims, sources, and disclosure expectations.

- Build a review process for AI placements and responses.

- Prioritize long-term credibility over short-term exposure.

Opportunities for Early Adopters

Early adopters rarely win because they spend more. They win because they learn faster. The first advantage is insight, not scale. You can see how your positioning performs inside answers. You can also spot which objections the system highlights and which comparisons users ask for.

Brands with strong educational assets have an edge. If your guides are clear and your product boundaries are honest, the system can include you without stretching the truth. That reduces risk and improves fit. It also makes your brand easier to summarize correctly.

There is still a limit. Early adoption should stay targeted. Broad experiments can create brand safety issues before you understand the environment. Tight scope and clear learning goals win.

- Start with one category and one high-intent scenario.

- Use insights to improve messaging and landing pages.

- Document what triggers inclusion and what blocks it.
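Documenting what triggers inclusion can start as a simple log. Because the same need gets phrased many ways, it helps to group prompt wordings into scenarios and track how often your brand appears per scenario rather than per exact prompt. In this sketch, the `Observation` fields, the scenario labels, and the brand "Acme Reports" are hypothetical, and responses are assumed to be captured by whatever method the team uses. Plain substring matching is deliberately crude: it misses paraphrased mentions and counts negative ones.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Observation:
    prompt: str    # exact wording tested
    scenario: str  # hypothetical label grouping paraphrases of one need
    response: str  # answer text captured during the test


def inclusion_rates(observations, brand: str) -> dict:
    """Share of captured responses per scenario that mention the brand."""
    seen = defaultdict(int)
    hits = defaultdict(int)
    for obs in observations:
        seen[obs.scenario] += 1
        if brand.lower() in obs.response.lower():
            hits[obs.scenario] += 1
    return {s: hits[s] / seen[s] for s in seen}


log = [
    Observation("simple reporting tool without heavy setup", "simple-reporting",
                "For quick dashboards, Acme Reports and two larger suites are common picks."),
    Observation("easiest marketing report builder for a small team", "simple-reporting",
                "Acme Reports is a lightweight option; larger suites need more setup."),
    Observation("reporting tool that needs no engineer", "simple-reporting",
                "Most teams start with a spreadsheet template."),
    Observation("enterprise BI platform comparison", "enterprise-bi",
                "The large suites dominate this category."),
    Observation("best analytics stack for a data warehouse", "enterprise-bi",
                "Consider a warehouse-native BI tool."),
]
rates = inclusion_rates(log, "Acme Reports")
print(rates)  # simple-reporting: 2 of 3 responses; enterprise-bi: 0 of 2
```

Reading the rates next to the response text is where the learning happens: a scenario with zero inclusion either signals weak fit or a positioning gap worth fixing.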

How to Prepare for AI-Native Ad Ecosystems

Preparation is not only a media task. It is a clarity task. Your product must be easy to describe, easy to compare, and easy to qualify. If an AI cannot summarize your offer accurately, paid inclusion will not scale. Start by auditing how your brand is described across public sources.

Then build assets that support answer quality. Create pages that define your category, explain your use case, and list constraints honestly. Avoid vague language. Make distinctions clear. This improves organic inclusion and makes paid inclusion less risky.

Finally, set a conservative operating model. Choose a test budget. Define stop conditions. Decide who owns approvals. Treat this like a new channel with new failure modes, not a small extension of search.

- Audit: how AI answers describe your brand and competitors.

- Improve: structured pages that answer decision questions.

- Test: controlled experiments with clear success and stop rules.
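Stop rules work best when they are written down before launch and checked mechanically. The sketch below encodes two illustrative conditions: the test budget is spent, or too many reviewed sponsored mentions were flagged as off-fit, misleading, or poorly disclosed. The `PilotPlan` and `Snapshot` names, the 5% tolerance, and the minimum review count are hypothetical; real stop conditions should come from your own governance rules.

```python
from dataclasses import dataclass


@dataclass
class PilotPlan:
    budget: float             # total test budget agreed before launch
    max_flagged_share: float  # tolerated share of reviewed mentions that get flagged
    min_reviewed: int         # minimum reviewed mentions before the flag rate is judged


@dataclass
class Snapshot:
    spend: float
    reviewed: int  # sponsored mentions checked by the approval owner
    flagged: int   # of those, mentions judged off-fit, misleading, or poorly disclosed


def stop_reasons(plan: PilotPlan, snap: Snapshot) -> list[str]:
    """Return every stop condition that has been hit; an empty list means continue."""
    reasons = []
    if snap.spend >= plan.budget:
        reasons.append("budget exhausted")
    if snap.reviewed > 0 and snap.reviewed >= plan.min_reviewed:
        share = snap.flagged / snap.reviewed
        if share > plan.max_flagged_share:
            reasons.append(f"flagged share {share:.0%} exceeds limit")
    return reasons


plan = PilotPlan(budget=5_000, max_flagged_share=0.05, min_reviewed=40)
print(stop_reasons(plan, Snapshot(spend=1_200, reviewed=50, flagged=4)))
# ['flagged share 8% exceeds limit']
```

Returning every triggered reason, rather than a bare yes or no, gives the approval owner a record of why the test stopped.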

Example Case

A mid-market software brand sold a tool for marketing analytics and reporting. AI answers in their category often explained the problem well, but named larger competitors as the default examples. The team considered buying broad visibility across many prompts. That option promised reach, but it risked shallow, low-fit mentions.

They chose a narrower strategy and excluded broad coverage. The team focused on one use case with clear constraints: teams that needed a simple reporting workflow without heavy setup. They invested in a focused guide and supporting pages. Then they tested sponsorship only for prompts that matched the constraint.

The lesson was practical. Their mentions were fewer, but each mention matched a real need. That kept the tone credible and reduced wasted exposure.

Key takeaways:

- Pick one use case with clear boundaries and build around it.

- Exclude generic prompts where your fit is weak.

- Use the responses as feedback on positioning and clarity.

Conclusion

GPT advertising will reward brands that improve answers, not brands that interrupt them. Decision rule: only invest when your inclusion makes the response more useful in a specific context. If you cannot define that context, do not scale spend. This keeps trust intact and makes measurement expectations realistic.

Frequently Asked Questions

Is GPT advertising the same as search advertising?

No. Search ads sit in dedicated slots. AI-native placements can appear inside the answer itself.

Will AI answers reduce organic traffic from SEO?

They can, because some questions get resolved without a click. This raises the value of being referenced as a source.

How should success be measured if clicks drop?

Use controlled tests and proxy signals like assisted conversions and brand search. Treat attribution as a model, not a single report.

What are the biggest risks for brands?

Trust loss, unclear disclosure, and weak measurement are the main risks. Poor fit can also damage credibility.

Where should a brand start with AI-native ads?

Start with one narrow use case and a small test budget. Improve clarity assets first, then test placements with strict stop rules.